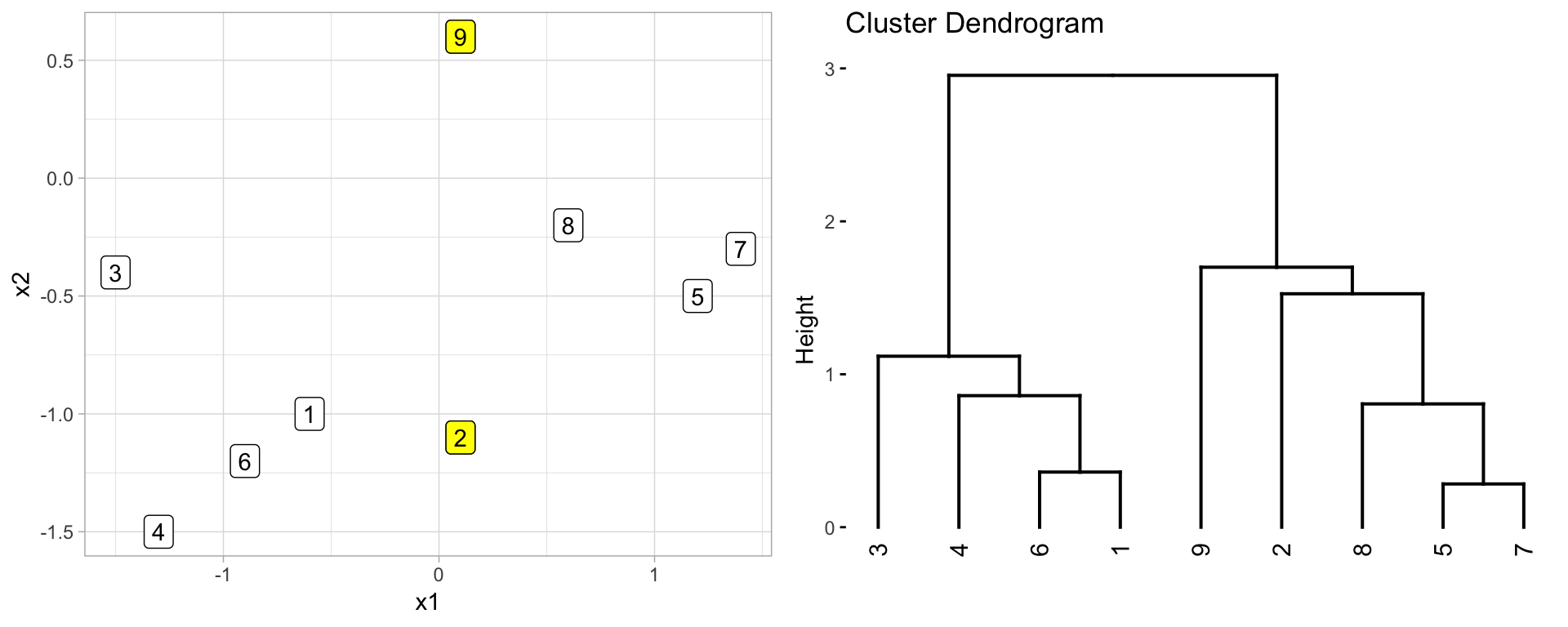

```
Rows: 30
Columns: 20
$ County                 <chr> "Tooele", "Uintah", "Wasatch", "Statewide", "Sa…
$ Population             <dbl> 59870, 34524, 25273, 2855287, 27906, 1524, 7221…
$ PercentUnder18         <dbl> 35.3, 33.6, 33.1, 31.1, 29.4, 27.8, 23.4, 33.6,…
$ PercentOver65          <dbl> 7.9, 9.1, 9.1, 9.5, 12.3, 23.7, 20.5, 11.0, 10.…
$ DiabeticRate           <dbl> 9, 8, 6, 7, 8, 10, 10, 8, 8, 7, 7, 9, 6, 4, 9, …
$ HIVRate                <dbl> 37, 21, 28, 111, 22, NA, NA, NA, 72, 36, NA, NA…
$ PrematureMortalityRate <dbl> 347.6, 396.2, 227.2, 286.7, 314.3, 445.8, 293.6…
$ InfantMortalityRate    <dbl> 5.0, 5.2, NA, 5.0, NA, NA, NA, NA, 5.8, 7.1, NA…
$ ChildMortalityRate     <dbl> 47.7, 57.4, 69.0, 52.9, 61.2, NA, NA, 70.4, 58.…
$ LimitedAccessToFood    <dbl> 14, 14, 14, 17, 17, 15, 14, 14, 16, 19, 11, 18,…
$ FoodInsecure           <dbl> 7, 5, 1, 5, 4, 41, 16, 8, 6, 12, 0, 14, 4, 4, 8…
$ MotorDeathRate         <dbl> 18, 23, 16, 11, 18, NA, 21, 36, 10, 13, NA, NA,…
$ DrugDeathRate          <dbl> 18, 13, 17, 17, 16, NA, 26, 18, 20, 18, NA, NA,…
$ Uninsured              <dbl> 17, 24, 24, 20, 24, 26, 19, 23, 20, 26, 14, 26,…
$ UninsuredChildren      <dbl> 10, 16, 16, 11, 15, 17, 12, 14, 12, 15, 10, 20,…
$ HealthCareCosts        <dbl> 9095, 7086, 8327, 8925, 8942, 7824, 8121, 8527,…
$ CouldNotSeeDr          <dbl> 13, 12, 13, 13, 13, NA, NA, 13, 11, 13, 13, NA,…
$ MedianIncome           <dbl> 61927, 60419, 62014, 57067, 43921, 36403, 43128…
$ ChildrenFreeLunch      <dbl> 34, 36, 30, 31, 38, 58, 31, 34, 37, 40, 12, 33,…
$ HomicideRate           <dbl> NA, NA, NA, 2, NA, NA, NA, NA, 2, NA, NA, NA, 1…
```